LucidDbDataStorageAndAccess

Contents |

Introduction

This page describes the portions of the LucidDB architecture that relate to data storage and access. This includes the underlying physical and logical storage mechanisms, the structure of LucidDB tables and indexes, and how those tables and indexes are written to and read.

This page assumes that the reader has a high-level understanding of LucidDB column store tables and bitmap indexes. See http://www.luciddb.org/arch.html for the necessary background information.

Data Storage

Physical Storage

Data in LucidDB is stored using 32K pages in an operating system file named db.dat. Pages are allocated from that underlying random-access file. When LucidDB is first initialized 2000 pages, equivalent to 62MB, are allocated for that file. The first two pages are reserved for storing system state (e.g., checkpoint information). The two pages store identical information. By having two pages and writing them out synchronously during checkpoints, this ensures that LucidDB will always have at least one good copy of the page, in case of a system crash while in the middle of writing out one of the two pages.

The remaining pages are available for storing user-data, although there are also metadata pages sprinkled in between those user pages. As the database grows, additional new pages are allocated, by default, in blocks of 1000 pages (31MB). That value is configurable. Once additional database pages are allocated, the size of the underlying file never shrinks, even if the pages are not being used.

Other *.dat files are also created by LucidDB for storing temporary data during query processing and for transaction logging and recovery.

Pages are accessed randomly by a unique blockId. This blockId, in turn, maps to an offset within the physical file. The pages are read into a buffer pool. The number of pages in the buffer pool is configurable and defaults to 5000 pages (~160MB). Hash buckets are used to efficiently locate pages in the buffer pool. Dirty pages are flushed to disk by one of the following:

- During checkpoints that occur on transaction commits, all outstanding dirty pages modified by the current transaction are flushed to disk.

- If the buffer pool fills up and if a page is not currently being accessed, it can potentially be victimized, resulting in it being flushed before it's removed from the buffer pool. Pages are victimized using a 2Q victimization policy.

- During data loads, when data and index pages fill up, those pages will no longer be written to and therefore are flushed asynchronously, to minimize the number of dirty pages that will need to be flushed during checkpoints.

- A lazy page writer periodically flushes dirty pages to disk when a certain percentage of the buffer pool is dirty.

See LucidDbPerformanceTuning#Background for further details on the LucidDB buffer pool and I/O scheduling.

Logical Storage

Pages are logically stored in what are known in LucidDB as segments. Pages within a segment are logically identified using pageIds. The underlying segments therefore provide a mapping from the logical pageId to the corresponding physical blockId.

LucidDB utilizes several segments to map logical pages. These segments provide the following capabilities:

- Allows pageIds to be numbered sequentially from 0 to N

- Allows pages to be randomly allocated and deallocated

- Allows pages to be versioned to support point-in-time reads

- Allows pages to be recovered during database crashes

See Segment Library for a more detailed description of LucidDB segments.

Column Store Tables

Clusters

All LucidDB tables are column store tables. A column store table consists of a set of clusters. A cluster denotes the granularity by which you are vertically partitioning the columns within a table. By default, each column maps to a single cluster. This is likely the most typical usage. However, it is possible to associate multiple columns with a single cluster. A single cluster page, therefore, stores the values for a specific set of rids (short for row ids), for all columns in that cluster.

As noted above, when loading data into clusters, once a cluster page fills up, rather than waiting for an explicit checkpoint, that cluster page is immediately written to disk asynchronously.

Each cluster is loaded in bulk, independent of the other clusters in the table.

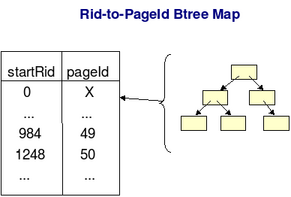

Rid-to-PageId BTree Map

To allow LucidDB to quickly access a column value associated with a specified rid value, each cluster also has associated with it a btree index. The btree index maps rid values to pageIds. The rid values correspond to the first rid value stored on each page within a cluster, and the cluster pages are identified by their pageIds. E.g., if we're trying to access rid 1000, and the btree indicates that cluster pageId 50's first rid value is 1248, while cluster pageId 49's first rid value is 984, then we know that we want pageId 49. Note that in order to locate that desired entry in the btree, a greatest lower bound (as opposed to a least upper bound) search on key "1000" is done.

Each cluster is uniquely identified by the pageId of the root page in this btree index.

Compressed Data Storage

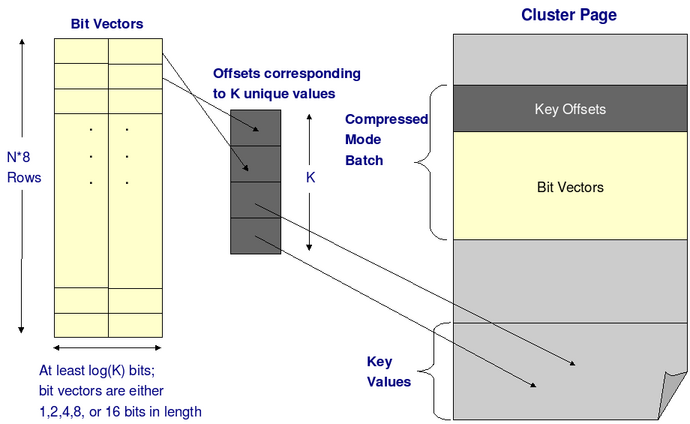

Within a cluster page, column values, by default, are stored in a compressed format, which allows LucidDB to minimize storage requirements. The idea here is instead of storing each column value for every rid value on a page, we instead store just the unique column values. We then associate with each column value a bit-encoded vector. For example, if you have 50 unique values, corresponding to US states, you can encode those values using a 6-bit vector. However, to make access to these bit encodings more efficient, at the expense of using slightly more space, we only encode the bits using up to two vectors that contain either 1, 2, 4, 8, or 16 bits. So, in this example, we would use a 4-bit vector along with a 2-bit vector to represent the 50 values. If we needed 7 bits to represent a set of unique values, we would just use a single 8-bit vector.

Batches

Within a single page, rids associated with each column in a cluster are stored in units known as batches. Therefore, the number of batches on a page is a multiple of the number of columns in a cluster. Each batch within a page shares the same set of unique column values associated with that page. So, in the case where a page is fully compressed, the unique column values stored on that page represent the unique values across all columns within that cluster, for that page.

Unless the batch is on the very last page and corresponds to the last set of batches for the columns in a cluster, the number of rids stored in a batch is always a multiple of 8. This is necessary for two optimizations that utilize the compressed data format described in the previous section. These optimizations will be described further below.

Non-compressed Batches

When constructing a batch, LucidDB assumes that the data will be stored using the compressed format described earlier. However, if the values to be stored within a batch contain very few duplicates, then the additional space you need to store the mapping from bit encodings to column values may outweigh the savings you get from storing only unique column values. In that case, LucidDB will revert to one of two other batch storage modes -- either fixed or variable. In the case of the former, the data values are always stored in fixed-length records, regardless of the actual size of the data. This avoids having to store additional offset values to locate each value. In both cases, the column values within the batch are stored multiple times if there are duplicate values.

Once LucidDB has decided to switch a batch from compressed to either fixed or variable mode, it assumes that the next group of batches for a particular column within that cluster also have similar data characteristics and therefore, it will continue to use that same storage format for that column. It will switch back to making a decision on the fly after it's written out a group of 20 batches.

Additional Compression

Further compression is achieved by stripping leading zeros from column types that map to 8-byte integers. This includes SQL types like BIGINT, DATE, TIME, and DECIMAL. For example, if you need to store the value 127, rather than storing this using 8 bytes, we can instead store it as a variable-length data value that consists of a single byte.

We only do this optimization for 8-byte integers because that's where you get the most benefits, as there is additional processing cost associated with doing this type of compression/de-compression.

Bitmap Indexes

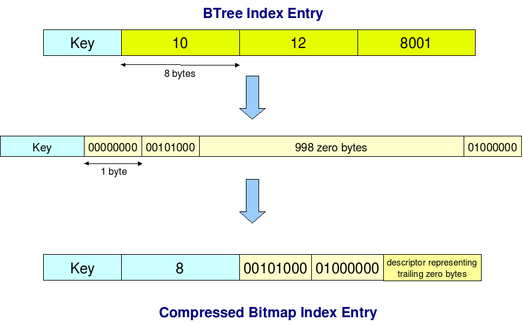

Compressed Bitmaps

Indexes created on column store tables are bitmap indexes. Bitmap indexes are like btree indexes except instead of storing a list of rids matching each key value in the leaf pages, the lists of rids are replaced with compressed bitmaps representing those rids. A bitmap consists of a series of bytes, where each bit within a byte corresponds to a rid. If the bit is set, then that indicates that the corresponding key value contains that rid. Compression is achieved by stripping off bytes that contain no set bits. A descriptor within each bitmap entry keeps track of these stripped-off bytes.

In order to determine where the bitmap starts, the entry also contains the initial rid value corresponding to the bytes, rounded down to the nearest 8-byte boundary. That initial rid value is actually part of the index key. It's appended to the end of the user-specified index keys. Since there is a limit on the size of a bitmap entry, it is possible to have multiple bitmap entries corresponding to a key value if there are a large number of rows containing that key value. By having the rid as part of the index key, it distinguishes those entries and allows them to be stored in rid order. The rid key is also utilized during certain types of searches, to be described later.

For example, if a key value matches rids 10, 12, and 8001, the compressed bitmap for that entry includes a starting rid value of 8 and 2 bytes representing rid values. The first byte has bits 2 and 5 set, representing rids 10 and 12, and the second byte has bit 1 set, representing rid 8001. The 998 zero bytes in between 16 and 8000 are represented in a descriptor, so LucidDB knows that there is that gap in between the 2 bytes.

Index Builds

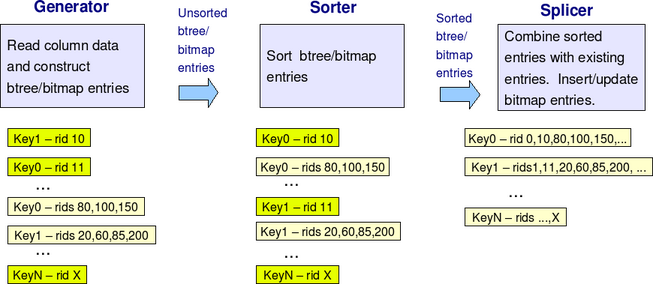

Bitmap indexes are built in bulk after the corresponding table data has been loaded. The build consists of three phases:

- A generator phase during which column data corresponding to newly inserted data is read and individual btree entries corresponding to each key and rid value are built.

- A sorter phase during which the btree entries constructed in step 1 are sorted on the index key and rid values in each entry.

- A splicer phase during which the sorted btree entries are combined together and inserted into the index.

When a column store batch is stored using the compressed data format, LucidDB optimizes step 1 by taking advantage of that format. Since that storage format already tells us, for a specific data page, the rid values corresponding to each unique key value on that page, rather than creating individual key/rid btree entries, we can instead utilize that information to do early construction of bitmap entries. Hence, that's one reason why batches within a cluster page must contain multiples of 8 rids.

During the splicer phase, if an index already contains data, the newly constructed entries are also combined with the existing entries, replacing the original entries.

Unique Key Values

If a bitmap entry consists of a single rid value, then rather than representing that rid using a compressed bitmap, the entry degenerates to a standard btree entry. Therefore, a unique index looks identical to a standard btree.

Page Versioning

LucidDB implements concurrency control by versioning pages. Any operation, whether DML or DDL, that modifies a page will result in the page being versioned.

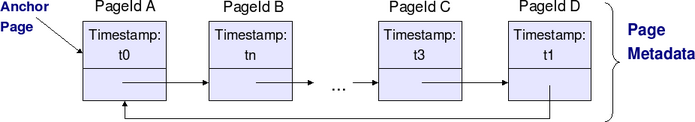

The original version of a page is known as an anchor page. As a page is versioned, new versions of the page are chained from its corresponding anchor page. The newest page is always chained directly from the anchor. Older pages follow it in the chain, in decreasing timestamp order, eventually looping back to the anchor. The page chain and timestamp information are stored in metadata entries, one per page. The timestamp associated with each page corresponds to when the transaction that created and/or modified the page committed.

When ALTER SYSTEM DEALLOCATE OLD is run, old page versions no longer in use can be deallocated, provided they do not correspond to anchor pages. Anchor pages cannot be deallocated because btree and bitmap index entries contain references to anchor pages. If LucidDB were to deallocate those anchor pages, it would then have to go back and update all index entries referencing those anchor pages.

In the case where a page chain reduces to an anchor page followed by the latest version of a page, you might think that LucidDB can eliminate this page chain by replacing the contents of the anchor page with the contents of the latest page and then deallocating the latest page. However, this is not feasible because if other transactions are accessing that latest page while LucidDB is trying to deallocate it, the deallocation will fail.

ALTER SYSTEM DEALLOCATE OLD can be run at any time. It is very efficient because it only accesses and updates page metadata (no data pages are accessed). As will be described in later sections, this command should be run after table rebuilds and truncates, which cause pages to no longer be needed. This is important because as noted earlier, db.dat is a grow-only file, and pages that are no longer in use cannot be reallocated for other uses, until they have been reclaimed by ALTER SYSTEM DEALLOCATE OLD.

As noted earlier, LucidDB implements segments that provide various forms of page mapping. One such segment provides the abstraction that allows LucidDB to reference the appropriate physical page corresponding to the page snapshot that should be read by each transaction. This particular segment will walk through a page's version chain, starting at the page linked from the anchor, until it finds a page whose timestamp is smaller than the timestamp at the time the transaction reading the page was initiated. Due to the order in which pages are chained from the anchor, in the most common case where you want to access the latest version of a page, if the page is versioned, LucidDB only needs to examine two metadata entries -- the anchor's and the one following the anchor. For each transaction, that page mapping is also cached, so the metadata only needs to be examined the very first time a page is accessed during a transaction.

The following diagram illustrates the metadata for a page chain.

A request to read pageId A at time t(n+1) would read pageId B. A request to read pageId A at time t2 would read pageId D.

If ALTER SYSTEM DEALLOCATE OLD is run and the above page chain exists, if the oldest active transaction started at time t2, then no pages can be deallocated because that transaction still needs to read pageId D, and pageId A can never be deallocated because it's the anchor. If the deallocation is done when the oldest active transaction started at time t(n+1), then it is possible to deallocate all pages except pageIds A and B. As noted earlier, in this case, LucidDB cannot replace the contents of pageId A with the contents of pageId B, in the hope of removing the page chain, because that would require LucidDB to deallocate pageId B, which other transactions may still be referencing.

Note that the semantics of page reclamation differs when warehouse labels are being used. See LucidDbWarehouseLabels#Space_Reclamation for a discussion of the differences.

Updating and Deleting Data

Deletes

LucidDB implements lazy deletes. In other words, rows are not physically removed from data and index pages when deleted. Instead, the deleted rids are recorded in an internal deletion index associated with each table. That deletion index is actually a bitmap index. Using a bitmap index minimizes the cost of storing the deleted rids. The index contains a single key, which represents the starting rid value, rounded down to the nearest 8-byte boundary, for each bitmap entry. The combined bits in the bitmap entries, therefore, represent the deleted rids.

Lazy deletes avoid the complexities associated with having to re-compress the data on a page when a row is deleted. Remember, one of the fundamental characteristics of a batch is that it stores a multiple of 8 number of rows.

A DELETE statement executes in two parts. The first part determines the subset of rows that need to be deleted, as specified by the where clause in the statement. LucidDB will retrieve the set of rids corresponding to these deleted rows, and sort them. The second part takes the sorted rids and inserts them in bulk into the deletion index, using the same splicer that's used for loading user-created indexes. When the splicer modifies the existing bitmap entries in the deletion index, it deletes the original entry and replaces it with the new one containing the additional deleted rids. Note also that the index page update is not done in-place, but instead a new copy of the page is created, as described earlier.

The splicer requires the rid input to be sorted. A side effect of doing the sort is that it effectively buffers all of the rid values from the source table before any data is inserted into the deletion index. Therefore, even though the first part of the delete is reading from the deletion index to filter out rids that are already deleted (see #Reading Data), it does not conflict with the second part of the delete, which is adding rids to the deletion index. Note though that even without the buffering provided by the sort, the conflict is avoided because of page versioning.

Upserts

Basic Operation

Upserts allow both inserts and updates to be executed within a single statement. LucidDB supports upsert by implementing the SQL2003 standard MERGE statement. A MERGE statement consists of:

- A target table

- A source, which can be a view

- An ON clause that correlates between the target and source using keys; these keys are generally unique

- An INSERT sub-statement that specifies the values for new rows

- An UPDATE sub-statement that specifies the values for updated rows

The source view can include UDX calls. UDXs are user-defined routines that return rows as output. They can be used to perform transformations on a set of input rows, or to produce a set of rows given some seed input. Therefore, think of the UDX call as just another table in the view definition.

In order to determine which source rows correspond to new rows that need to be inserted vs existing target rows that need to be updated, LucidDB executes a left outer join between the source and the target. The join query computes the column values for the insert and update rows, and includes a selection of the rid column from the target table. Hence, a null rid in the join result represents a new source row.

Updates are implemented as deletes followed by inserts. LucidDB combines the new rows resulting from the updates with the actual new rows into a larger set of rows that are to be inserted into the table. The original rows that are updated are deleted from the table.

Upserts like all LucidDB DML operations run in single-statement transactions that either run successfully to completion or are rolled back on errors. However, it is possible to specify that for any single statement, up to a certain number of errors, like unique constraint violations, should be ignored. In that case, rather than aborting on an error, the error is recorded in a log file, and the statement runs to completion, provided the error limit isn't reached. See LucidDbErrorHandling for details on how this works.

Execution Flow

Pulling these different pieces together, here's a high-level breakdown of what's actually occurring under the covers during execution of an upsert statement.

- Execute an outer join between the source and target. Note that even though the upsert is reading from the target table and will later be writing to it, there's no need to buffer the result of this outer join because upserts take advantage of page versioning. Using page versioning, the source for the upsert can read a snapshot of the target table as of the start of the statement, ignoring modifications that are made concurrently by the rest of the statement.

- Separate out the rows corresponding to update rows from the rows corresponding to new insert rows.

- Bulk insert the rows corresponding to updated rows followed by rows corresponding to insert rows. If the rows are being inserted into existing pages, then those pages are versioned.

- Bulk delete rows corresponding to the original rows that are being updated. As described in the previous section, this entails inserting the deleted rids into the internal, deletion index.

- Insert new entries into the target table's bitmap indexes corresponding to the new rows, as described in the Index Build section. Again, if the entries are being inserted into existing pages, then those pages are versioned. In order to determine whether or not there is a unique constraint violation, the deletion index is consulted.

- If any unique constraint violations were detected in the previous step and errors are to be ignored, then delete those rows by inserting their rids into the deletion index.

The steps above are all sequential, except for 3 and 4, which can occur concurrently.

By deleting the rows in step 4 before inserting new key values in step 5, this addresses the classic problem of executing an update on a unique key column, where you increment the column values by 1.

Note that the order of the inserts in step 3 is important because if a table contains unique constraints, we want to allow rows that update columns, which excludes unique constraint columns, to occur first. Otherwise, any new rows that create constraint violations will prevent those updates from occurring.

Perhaps, an example will better illustrate the scenario in which this ordering is important. Suppose you have a table T with columns a and b. There is a unique constraint on column a. One of the rows currently in T is (1, 1). You execute an upsert statement that consists of an update to row (1, 1), where you update column b to the value 2. That upsert statement also includes an insert of a new row (1, 0). Assuming you've setup execution to prevent unique constraint violations from aborting statements, if the insert of (1, 0) occurs first, then that's not detected as a unique constraint violation because due to the update of that same row, the rid corresponding to that row has been inserted into the deletion index. So, it's assumed that the insert of (1, 0) is replacing the original (1, 1) row. However, having inserted (1, 0), there is now an entry in the unique index for key value 1. Consequently, the insert of the index entry corresponding to row (1, 2) will fail. If we reverse the order of the inserts, the insert of row (1, 2) succeeds, and the insert of (1, 0) causes a unique constraint violation, which is the more desirable effect.

See LucidDbUniqueConstraints for further details on the interactions between unique constraints and upserts, and LucidDbErrorHandling for information on what happens when errors are encountered.

Optimizations

Filtering Non-Updated Rows

One optimization LucidDB performs is if all updated columns in a row are identical to their original values, then the update of that row is skipped. Note, however, that as long as any column in a table is updated, in general, this results in an entire new row, which means that new values are inserted into all clusters.

Replacement of Updated Columns

There is an exception to the statement that LucidDB creates entire new rows for upserts. For certain types of upserts, LucidDB executes the upsert by replacing entire clusters rather than deleting and inserting individual rows. Therefore, only the columns that are updated are affected. This occurs if the following conditions are met.

- The upsert is an update-only upsert (i.e., does not contain a WHEN NOT MATCHED clause).

- The percentage of the columns updated does not exceed a threshold value.

- The percentage of rows updated exceeds a second threshold value that's dependent on the percentage of columns updated.

- Only clusters containing single columns are being updated.

- The upsert contains an ON condition where the keys from the source are unique.

Savings, in both time and space, occur with this optimization because:

- LucidDB avoids having to read and update columns that aren't updated in the upsert statement.

- LucidDB avoids having to delete the original rows that are updated by the statement.

- Inserts into btree indexes are more efficient because the values are being inserted in strictly monotonic order.

But the tradeoff is there will be additional cost because we have to re-insert all values into columns that are updated, not just the subset of rows that are affected by the statement, as well as rebuilding all indexes that include any of these updated columns. Therefore, that is why conditions 2 and 3 are necessary for this optimization to be beneficial.

Updates Written As Upserts

LucidDB did not support the UPDATE statement until version 0.8.1, but an UPDATE can be expressed as an UPSERT in any version. Regardless of whether the explicit UPDATE syntax is used vs the MERGE alternative, the optimizations performed on the statement are the same. If LucidDB were to process these statements as described earlier, it would end up doing a join between the source and target, i.e., in this case, a self-join, to locate the candidate rows for the upsert. LucidDB avoids this by recognizing these special case upserts. Instead of the self-join, it simply selects the candidate rows from the target table.

Note that conditions 1 and 5 from the replacement of updated columns optimization are pre-requisites for this optimization. Therefore, if conditions 2-4 are also met, then both optimizations can be utilized.

Unique Indexes

Another minor optimization LucidDB performs is during step 5 from the previous section, if the index is a unique one, and the new index entry corresponds to an updated row, rather than inserting a new entry into the index, the existing one is updated. This way, LucidDB avoids increasing the size of the unique index, when only updates are being executed.

Upserts and Page Versioning

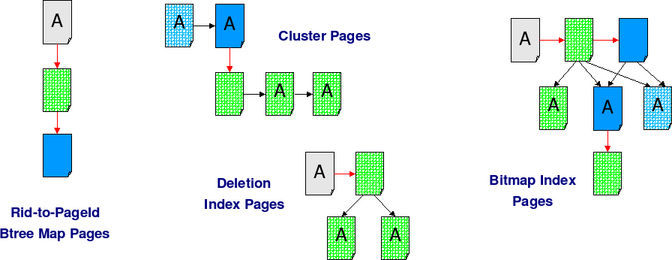

The following diagram illustrates the different page versions that are created after an initial load and a subsequent incremental load.

- The grey pages are the original pages from when the table is first created. These are empty pages.

- The blue pages are the pages created and/or versioned by the initial load.

- For the rid-to-pageId btree map, a new page is versioned from the original, empty root page.

- Two new cluster pages are created by the load.

- No deletion index pages are versioned because the initial load does no updates.

- The bitmap index creates a new version of the empty root, and creates two new leaf pages.

- The green pages are the pages created and/or versioned by the incremental load.

- The load of the cluster results in the last cluster page being versioned, and two new additional cluster pages being created.

- The deletion index versions the root and creates two new leaf pages.

- One of the leaf pages in the bitmap index is versioned, and a new leaf page is created in front of the existing ones. The other existing leaf page is unmodified.

- All anchor pages are marked with the letter "A".

- The latest version of each page is marked with hatching.

- Red arrows indicate page version chains.

Truncate Table

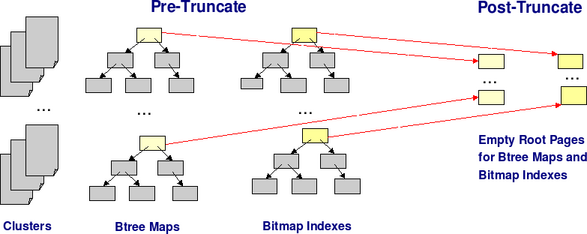

One way of removing all rows from a table is to execute a DELETE statement without specifying a WHERE clause. A more efficient way is to execute a TRUNCATE TABLE statement. Unlike a delete, which operates at the row level, a truncate operates at the page level, updating the metadata for those pages. A truncate creates new versions of the root pages for the btrees associated with each cluster in the table, as well as for the table's bitmap indexes. The new versions of those root pages contain no entries, effectively removing all rows from the table.

Because of page versioning, it's possible to read from a table while it's being truncated. Once the truncate table transaction commits, new transactions will see an empty table. Queries that started before the truncate was initiated and are still running after the truncate commits, will continue to see the pre-truncated table.

Because LucidDB provides this level of concurrency, the pages corresponding to the pre-truncated table are not automatically deallocated as a result of the truncate. With the exception of the original btree and bitmap index root pages, at the end of the truncate, the pages corresponding to the pre-truncated table are marked as being "old". Provided those pages are no longer being referenced by any active transactions, the pages will be deallocated and available for reuse once ALTER SYSTEM DEALLOCATE OLD is run. The original btree root pages cannot be deallocated because they are recorded in the system catalogs and are used to identify a table's clusters and bitmap indexes.

The following diagrams illustrates what the pages of a table look like pre and post truncate. The grey pages are the pages, post-truncate, that are candidates for being deallocated by ALTER SYSTEM DEALLOCATE OLD. The red arrows indicate the page chains that result from versioning the original root pages to new, empty root pages.

Table Maintenance

Table Rebuild

By now, the astute reader has probably come to realize that due to the way LucidDB DML is architected, the following overhead are incurred during data access:

- As rows are deleted (and updated since updates are deletes followed by inserts), the number of entries in the deletion index increases. This is minimized by the fact that the deletion index is a bitmap index.

- As rows are deleted (and updated), the space occupied by a table increases due to keeping around the old, deleted rows.

- As pages are updated, the version chains associated with those pages become longer. This is exacerbated if your transactions are small and affect very few rows.

The ALTER TABLE REBUILD statement alleviates these issues.

The way ALTER TABLE REBUILD works is LucidDB first creates an identical, empty copy of the table, matching all of the clusters and indexes from the original table. Then it internally issues an insert statement, selecting data from the original table. This effectively bulk loads the new copy of the table. Similar to a user-issued select, the selected data excludes deleted rows. Again, due to page versioning, it's possible to rebuild a table while it's being accessed by other read transactions.

When creating a new copy of the original table, the root pages of the btree maps and bitmap indexes for the table are versioned, similar to what's done in the case of table truncate. However, in this case, the new versions of those pages will contain the newly loaded data. Also, as in the case of truncate, except for the original root pages, the pages corresponding to the original table are marked as being old once the copy into the new table has completed. These old pages, including any pages versioned from those pages, can then be deallocated once they're no longer being accessed by other transactions, by running ALTER SYSTEM DEALLOCATE OLD.

Therefore, in summary, the benefits of executing a rebuild are:

- The deletion index is emptied.

- The deleted rows are removed from the new table, thereby reducing the total number of pages that need to be read to access the entire table. Note also that as part of the rebuild, the data is re-compressed within the new data pages.

- Page chains are eliminated, except in the case of root index pages.

For these reasons, it is a good idea to run rebuild on a periodic basis, if your incremental loads are modifiying existing data in tables. ALTER SYSTEM DEALLOCATE OLD should be run afterwards to reclaim the pages corresponding to the original tables prior to the rebuild. This is important because in the worst case, a rebuild can double the number of pages used by a table.

Another reason you may want to run rebuild is if one of your indexes is corrupted. The rebuild may be able to correct the corruption. Generally, you'll know if you have a corrupted index, if your load aborts with a Duplicate key detected error. This error is an internal one, and normally you should never see it. Note that it's not the same as the error you get if you have a legitimate unique constraint violation due to duplicate data in primary key or unique constraint columns.

Corrupted indexes can also be detected through an ANALYZE TABLE. See below for further details.

Table Rebuild and Page Versioning

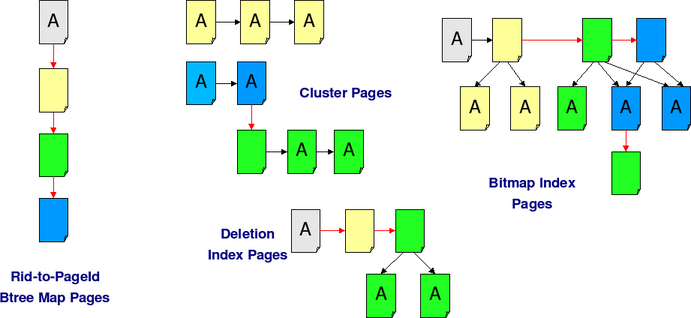

The following diagram illustrates the impact of page versioning on a table rebuild.

- The yellow pages are the latest page versions, i.e., those created by the rebuild.

- The yellow root page in the rid-to-pageId btree map only maps the new, yellow cluster pages.

- The yellow root page in the deletion index is empty.

- The grey pages are the original root pages.

- The green and blue pages are the pages created by prior loads. See the Upserts and Page Versioning section.

- The green and blue pages can be deallocated by ALTER SYSTEM DEALLOCATE OLD, provided no active transactions are referencing them.

- Red arrows indicate page version chains.

- Anchor pages are marked with the letter "A".

Analyze Table

Key components of the LucidDB optimizer are cost-based, specifically the components that decide join ordering and which indexes to use when processing filters. In order to come up with realistic cost estimates to make reasonable choices, the optimizer relies on data statistics. These statistics are gathered using the ANALYZE TABLE command and are:

- the number of rows in the table

- the number of distinct values in each column

- data distributions for each column

- the number of pages in each cluster and each bitmap index, including the internal deletion index

The column level statistics are determined by executing GROUP BY queries on each columns being analyzed. For example,

SELECT col, COUNT(*) FROM tab GROUP BY col;

To execute these queries, LucidDB scans the entire table, which results in exact statistics. However, the optimizer generally does not require stats to be exact; they just need to be in the ballpark to prevent the optimizer from choosing bad alternatives. ANALYZE TABLE has been extended to support sampling. In other words, LucidDB can estimate column statistics by reading a subset of the table. This can help significantly for very large tables. Sampling is achieved by modifying the GROUP BY queries and then using the sample or information gleaned from indexes and constraints to aid in estimating the distinct value count. The GROUP BY queries become, for example,

SELECT col, COUNT(*) FROM tab TABLESAMPLE SYSTEM(p) GROUP BY col;

Note that the sampling percentage p is based on the number of rows in the table, unless explicitly specified in the ANALYZE TABLE statement. Statistics estimation uses SYSTEM sampling, which reads 10 equal-sized, equidistant blocks of values from the column.

If analyze is run on only a subset of columns in the table, then the GROUP BY queries are only executed on the column subset.

The number of pages is determined by walking through each page in each cluster/bitmap index. Note that the page counts do not reflect versioning. In other words, if there are n versions of a single page in a cluster, that page still only contributes a count of 1 to the overall page count for the cluster. When estimating statistics, the btree walk is also used to count distinct values in a column when it is appropriate. For example, if col is the first column in an index (with 1 or more columns), the btree walk for the index is configured to return the number of values found in col, which must then be adjusted for the number of deleted rows.

As part of walking through each page, LucidDB also performs sanity checks on the underlying btree indexes. Therefore, a side effect of ANALYZE TABLE is it will detect if you have a corrupted index. You'll know that your index is corrupted if you see a BTreeVerifier error in the server log. In the event that you do have a corrupted index, the first thing you should try is to run ALTER TABLE REBUILD on the table. If that doesn't help, then you may have to restore your data from a backup.

Data statistics are also used by the LucidDB resource governor to decide how much memory to assign to each individual operation within a query. Since hash joins, hash aggregation, and sorting are memory-intensive operations, the resource governor will assign additional memory to these operations, if it's available. In the absence of stats, the resource governor will equally assign the additional memory across all of these operations. Hence, it could end up assigning more memory than necessary to an operation that doesn't need the additional memory, at the expense of an operation that could have used it instead.

Whenever inserts or updates are made to a table that dramatically affect the column cardinalities, data distributions, and/or page counts, ANALYZE TABLE should be rerun. In the absence of column stats, the optimizer assumes the data within columns are uniformly distributed. Therefore, if that's not the case, it's important that you run ANALYZE TABLE. This is especially important for columns that are joined and/or grouped, and to a lesser extent, filtered. This should be done either on the entire table or a subset of columns, depending on the changes that have occurred. Note that it isn't necessary to rerun ANALYZE TABLE if the only statistic that has dramatically changed is the table row count. That's because this statistic is maintained as DML statements are executed. Therefore, this value is always up-to-date, regardless of whether analyze has been run.

It is best to run ANALYZE TABLE on a pro-active basis, based on what has occurred after a data load. However, you may find it necessary to run ANALYZE after the fact if your queries are performing poorly, and you suspect that the optimizer has chosen a non-optimal join ordering. See LucidDbJoinOptimization#Cost Functions for details on how statistics are used by the optimizer to determine optimal join orderings.

In some cases, a poor join ordering can even result in errors that prevent the query from executing to completion. You'll see these as hash recursion errors. If you do encounter this error, then you should run ANALYZE TABLE on the tables referenced in the query.

Running ALTER TABLE REBUILD does not update the statistics associated with the table. They still reflect the statistics as of the last time ANALYZE TABLE was run. Likewise, for TRUNCATE TABLE, except for the row count, which will reflect the now empty table.

ANALYZE TABLE cannot be run if a DML statement is currently in progress on that table. This ensures that the statistics are consistent across the table.

Alter Table Add Column

Adding a column to an existing table is as simple as creating a new empty cluster and inserting one value per existing row into the new cluster, where the values are determined by the new column's DEFAULT clause or identity column sequence generator. No modifications to the clusters for existing columns are necessary. Note that if a table has deleted rows, ALTER TABLE ADD COLUMN will create corresponding entries (which will never be seen by users) in the new cluster (otherwise its values wouldn't correctly line up with the rows in the existing columns). Validator visibility rules ensure that the new column is not visible to queries until after its creation has successfully completed, so adding a column can be performed at any time without blocking query execution.

Data Access

Row Scans

Reading Data

One of the main benefits of the column store data storage is it reduces the data that needs to be read during data selection. Instead of reading all columns in a row, the LucidDB optimizer detects if a column is not being referenced in a query and avoids reading those clusters that do not contain referenced columns. The columns that are referenced are individually read, and then their values are combined together to form rows.

In the case where a table is read without the use of bitmap indexes, i.e., a full table scan, LucidDB reads the column values for all rids in the table. It does this by sequentially reading each rid value, starting at rid 0. However, because LucidDB supports lazy deletes, some of these rids may correspond to deleted rows. In that case, LucidDB bypasses these deleted rids and avoids reading them altogether. The rids corresponding to the deleted rows are passed in as input into the full table row scan. That input is represented using compressed bitmaps to minimize its size.

If the optimizer decides that bitmap indexes should be used to reduce the rids that the row scan needs to read, then that subset of rid values is passed in as input into the row scan. The rids are in sort order to ensure locality of data reference. As with the deleted input rids, they're also passed in using compressed bitmaps. Any deleted rids are subtracted from this input so in the case of indexed row scans, the row scan doesn't need to do any additional processing to avoid reading deleted rows. Details on how the bitmap indexes are read is discussed in the Indexed Scans section.

Filtering

In addition to reading column data, LucidDB row scan also does simple data filtering. We refer to this as residual filtering. The filters need to be sargable. This basically means that the filters need to be one of the following:

- an equality filter comparing a column to a constant, e.g., col = 10

- a range filter on a column, with either lower or upper bounds, or both, and constant bounds; e.g., col BETWEEN 20 and 30

- an IN list filter on a column; e.g., col IN (40,50,60)

The same column can be referenced in these filters, provided they are OR'd together. For example,

col = 10 OR col = 20

Different columns can be referenced in these filters, provided they're AND'd together. For example,

col1 IN (40,50,60) AND (col2 < 0 OR col2 > 100)

The row scan will apply these filters and avoid returning non-qualifying rows in its output. If there are residual filters on multiple columns, when a column does not qualify its filter, then LucidDB skips reading the remaining unread columns for that row.

In the case where a batch of rows is stored in compressed mode, LucidDB utilizes this to do fast data filtering. The row scan first applies the residual filters, applicable to that column, on each unique value in the batch and stores the true/false result in a bitmap. As column values are read for individual rows, rather than re-applying the residual filters, the row scan can simply consult the pre-computed bitmap to decide whether or not the column qualifies. Hence, this is the second reason why batches contain multiples of 8 rows.

Intelligent Pre-Fetch

LucidDB supports pre-fetch of column store cluster pages as well as bitmap index leaf pages.

Without column store pre-fetch, cluster pages would be read on-demand. In other words, a cluster page would not be read until the row scan is ready to process the rid corresponding to that cluster page. With intelligent pre-fetch, the row scan looks ahead at the rids that it will need to read. Using the rid-to-pageId btree map described earlier, the row scan will determine the sequence of pages corresponding to those look-ahead rid values. It will then issue asynchronous read calls to read those pages into the buffer pool. Therefore, by the time the row scan actually needs to process a particular page, it will already be cached in memory.

LucidDB will also automatically detect situations where it does not make sense to incur the additional overhead of tracking which individual pages to selectively pre-fetch. For example, in the case of a full table scan, all cluster pages will need to be read, so LucidDB turns off intelligent pre-fetch and simply sequentially pre-fetches all pages from the column store cluster.

Bitmap index leaf pages are pre-fetched by scanning the interior nodes of the index first to locate the leaf pages corresponding to the specified search key. Knowing which leaf pages need to be read, those pages are pre-fetched so by the time they need to be searched, they're cached in memory. After all leaf pages corresponding to a particular search range have been pre-fetched, the interior nodes are read for the next search range, yielding the next set of leaf pages to pre-fetch.

LucidDB also uses prefetch for all temporary segment access (hash join partitions, external sort runs, and miscellaneous buffering).

Indexed Scans

Individual Index Lookups

Bitmap indexes are searched in the same way as standard btrees. Let's consider the different scenarios.

The simplest is the case where your filters all contain the equality operator, and the number of filters matches the number of keys in your bitmap index. For example, suppose you have an index on columns a and b, and equality filters on both columns as follows:

CREATE INDEX i ON t(a, b); SELECT * FROM t WHERE a = 10 and b = 20;

In this case, a btree search is done on the key values (10,20). The compressed bitmaps corresponding to those key values contain the rids matching that key value pair. In this case, since the rids in each of the bitmap entries are non-overlapping and are in strictly increasing order, they can be passed directly into the row scan, in the same order in which they appear in the index.

The second case is where you have a multi-key index, but you're using an equality filter on only the first part of the key. For example, you have the same index as described above, but a query like:

SELECT * FROM t WHERE a = 10;

In this case, a btree search is done on the partial key (10). Again, there may be multiple entries matching this key value. But unlike the previous case where you're matching on a full key, the rids in these bitmap entries may not be in increasing order. For example, suppose you have have the following rids corresponding to keys (a, b) where a = 10:

| a | b | rids |

|---|---|---|

| 10 | 1 | 5, 7, 20, 100, 300 |

| 10 | 2 | 1, 15, 80, 200 |

| 10 | 3 | 4, 10, 50, 150 |

LucidDB will efficiently combine the matching entries into one or more bitmaps where the rid values in each are in strict sort order. In other words, in the example above, the sequence of rids in the resulting bitmaps will contain the values 1, 4, 5, 7, 10, 15, 20, 50, 80, 100, 150, 200, and 300.

In the case where a bitmap index is used with a range filter such as the following:

SELECT * FROM t WHERE a = 10 and b > 1;

then multiple bitmap entries can match the range of values. Again, these bitmaps need to then be combined together and sorted, similar to the partial key case described above.

Intersecting Multiple Index Lookups

LucidDB can also use multiple indexes to process filters on a table. For example,

CREATE TABLE t(a INT, b int); CREATE INDEX it1 ON t(a); CREATE INDEX it2 ON t(b); ... SELECT * FROM t WHERE a < 20 AND b >= 10;

Because the matching rids from each index are represented as bitmaps, LucidDB can intersect those bitmaps to efficiently find the rids that match all filters applied on the indexes.

As an optimization, if possible, LucidDB will also utilize the bitmaps it has read thus far to skip ahead when reading from the other intersecting indexes. For example, if 3 bitmap indexes need to be intersected, and the first bitmap index returns a bitmap where the initial rid is X, then if there is a closed lower bound on the second index search, LucidDB sets up the search on the second index to read rids >= X. Likewise, if the initial rid read from the second index is Y, and there's also a closed lower bound on that third index search, then the lookup key for that index will position on rids >= Y.

So, in the earlier example where we're intersecting two indexes, assuming the index on column a is read first, if the smallest matching rid from that lookup is 100, then if there are two bitmaps entries in the second index where b = 10, one with an initial rid of 0 and the other with an initial rid of 80, then LucidDB will setup the search to skip that first entry.

Subtracting Deleted Rids

As noted earlier, when doing an index lookup, rids corresponding to deleted rows are subtracted from the rids returned from the index lookups, before those rids are passed in as input into the row scan. Because the deleted rids are stored in a bitmap index, this subtraction operation is efficient. Similar to what's done when intersecting bits, the subtraction also utilizes the rid that's part of the index key to skip past bitmaps that do not overlap with the minuend's bits.

Other Indexing Operations (Future)

Note that the mechanism LucidDB uses to subtract deleted rids, when doing indexed scans, could also be used to process filters like:

SELECT * FROM t WHERE a = 1 AND NOT(b = 2);

An index lookup can be done to find rids where "a = 1". A second index lookup can be done to find rids where "b = 2", and then the rids from that second lookup can be subtracted from the first.

Filters like the following could also be processed using multiple indexes by taking the union of the indexed scan results.

SELECT * FROM t WHERE a = 1 OR b = 2;

Both of these techniques are not currently supported.